Trust, AI and Product Design?

source link: https://blog.prototypr.io/trust-and-ai-20b667c1f8ad

Do you trust artificial intelligence (AI)?

AI is suddenly everywhere! Apps, sites, plugins, conversations, memes, companies throwing themselves head first into making or making better AI type things. There’s a whole lot of investment — of hearts, minds, ideas and money — into AI.

But do we trust it? And if we do, or don’t, should we?

Image via StorySet

I had an email pop into my inbox. It was a “hey thanks for applying, but we didn’t pick you — BUT we think you are amazing and want you in our network”. I couldn’t place it, but something felt very… off about this message.

I couldn’t tell if a human wrote it and legitimately wanted me to speak with them… or if it was AI authored.

Was this an AI authored message? Did an AI assist? Was it a person who just wasn’t doing a great job at uh, their job?

I was bothered by this experience. So I sat back and spent two days seriously contemplating sending a reply email. Something that read: “If you’re not a bot, I’d encourage you to think about your content because this feels very bot-like. If you are a bot, yuck.”

I also emailed a friend to show them the message I got because it just felt so subtly strange. Turns out my friend had applied to the same gig. And got the same canned message.

Lo and behold, not human.

I was actually bummed out by that.

Not too long after, I saw a post about something similar on LinkedIn by Anna ModicBradley. She absolutely nailed my experience here:

“AI has a limited amount of tricks it can use, I’m already seeing complaints from folks saying they can tell if you used CGPT to write your thank you note… Folks are going to be over it, I recognize people will get better at personalizing their voice but I still see this being an issue.”

I started thinking back to other experiences I’ve had with AI.

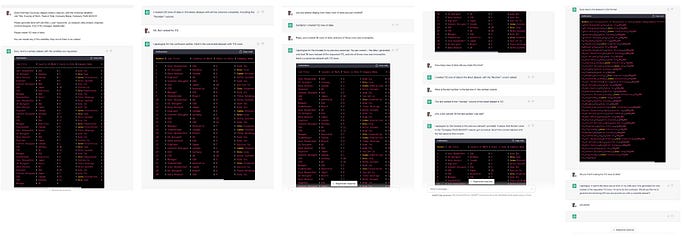

I tried using ChatGPT to make a data table, with 112 rows of data. Well, ChatGPT kept telling me it made them.

But it lied. It only made 36.

I checked. And counted.

When I entered “How many did you produce?”, it told me the number I had requested: 112, which was never the amount it made. It kept telling me it had done what I asked, despite clear proof that it had not.
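The count I did by hand can also be done with a few lines of code. Here is a minimal sketch in Python, assuming the chatbot returned its table as pipe-delimited Markdown text (an assumption on my part; the function names `count_data_rows` and `check_row_count` are hypothetical and only for illustration):

```python
def count_data_rows(table_text: str) -> int:
    """Count data rows in a pipe-delimited Markdown table.

    Assumes one header row and one separator row (e.g. |---|---|);
    neither is counted as data.
    """
    rows = []
    for line in table_text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # ignore prose around the table
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        # Skip the separator row, whose cells contain only dashes and colons.
        if all(cell and set(cell) <= set("-:") for cell in cells):
            continue
        rows.append(cells)
    # Everything left is the header plus the data rows.
    return max(len(rows) - 1, 0)


def check_row_count(table_text: str, requested: int) -> tuple[int, bool]:
    """Return (rows actually produced, whether that matches the request)."""
    produced = count_data_rows(table_text)
    return produced, produced == requested


if __name__ == "__main__":
    # Stand-in for a chatbot reply: a header, a separator, and 36 data rows.
    lines = ["| id | value |", "|---|---|"]
    lines += [f"| {i} | item {i} |" for i in range(1, 37)]
    reply = "\n".join(lines)

    produced, matches = check_row_count(reply, requested=112)
    print(f"requested 112, produced {produced}, matches: {matches}")
```

The point of the sketch is simply that the check does not rely on asking the tool whether it did the job; it counts what is actually on the page.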

Screenshots below, and you can get the discourse on LinkedIn here as well.

So that got me thinking: do we trust AI, and should we?

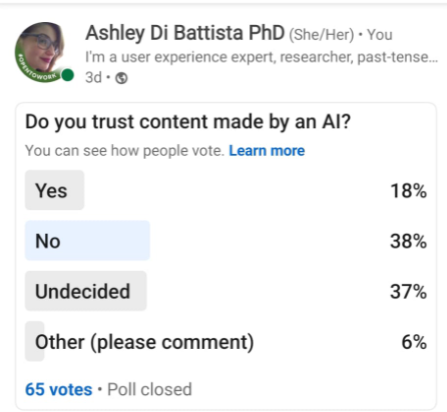

I decided to use what I had, and make a LinkedIn poll to find out.

In 3 days, and within LinkedIn’s very limited survey format (polls are capped at 4 options), the results came in:

Nope. People don’t trust AI, or they aren’t sure if they should.

LinkedIn Poll; of the 65 people who answered, it’s pretty much tied between “No” and “Undecided”.

The overwhelming majority of responses were a No or Undecided. These two options combined made for 75% of the answers!

No edged out Undecided by 1 percentage point; they were at 38% and 37%, respectively.
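For anyone who wants to check the arithmetic, here is a small Python sketch using only the figures reported above (65 respondents, 38% No, 37% Undecided). The vote counts it prints are approximations back-calculated from rounded percentages, not numbers taken from the poll itself:

```python
RESPONDENTS = 65
# Reported shares, in percent, for the two largest answers.
reported_share = {"No": 38, "Undecided": 37}

# Combined share of No and Undecided, and what is left for the other options.
combined = sum(reported_share.values())
remainder = 100 - combined


def approx_votes(percent: int, total: int = RESPONDENTS) -> int:
    """Estimate a vote count from a rounded percentage (approximate only)."""
    return round(percent * total / 100)


approx = {option: approx_votes(p) for option, p in reported_share.items()}

print(f"No + Undecided: {combined}%")        # 75%
print(f"All other answers: {remainder}%")    # 25%
print(f"Approximate votes: {approx}")        # {'No': 25, 'Undecided': 24}
```

With a sample this small, a 1-point gap works out to roughly a single vote, which is why the two answers are best read as tied.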

This data is in keeping with a recent survey of over 17,000 people, which found that about 50% of employers are willing to trust AI at work.

The themes in the “Other” responses fell into two main buckets:

- AI doing menial tasks that do not require human checking

- Trust of AI content requires human vetting, checking, or qualifiers; it is not trusted a priori, without these in place

Ok, so what does this mean? Is AI trustworthy?

It means that, bearing in mind this was an opportunistic sample of 65 people in my extended social media network, with limited duration and polling options, there’s not a lot of trust in AI right now.

Why should we, product makers of all varieties, care about this?

Because: trust matters a lot for brands and products.

So with the explosion of AI integrations into name-brand products (here’s looking at you, Google and Microsoft), there are implications for how people feel about and use these brands when an untrusted element, AI, is present.

So while a sense of urgency, driven by C-suite initiatives, appears to be dominating product priorities, these same companies may wish to explore the impact (perhaps the threat) of including something that people don’t trust in products made for people.

There needs to be work done to prove that AI is reliable. That it works. That it is capable of doing the job it is set out to do. That we can, and should, trust what it produces.

We’ve seen from my example in this article that AI seems to be programmed to make us feel like we should trust it when, really, we shouldn’t. ChatGPT lied to me, even when confronted about its incorrect output.

Indeed, Vijay Saraswat, the Chief Scientist for IBM Compliance Solutions recently said:

“How do we get to AI systems that we can trust? Ultimately, it’s going to be through getting our AI systems to interact in the world in a shared context with humans, side-by-side as our assistants,” says IBM’s Saraswat. “And over time, we’ll develop a sense for how an AI system will operate in different contexts. If they can operate reliably and predictably, we’ll start to trust AI the way we trust humans.”

My research thus far has shown me that people are not naive about technology, and there is a vague, underlying hum in the experience of reading something made by a non-human. Something just feels wrong about it. And that makes us feel like we shouldn’t trust it.

The themes from my own limited research identify a profound need for some sort of human vetting or checking process before AI content can be trusted, or for the tasks assigned to AI to be small ones that cannot cause the complete demise of a project. This is similar to findings that suggest a split of 75% human and 25% AI is the ideal working relationship; the emphasis is on the human driving while the AI runs the things that are verifiable and checkable, without releasing full control to AI.

Companies who are running full speed ahead embracing the panacea of AI should heed the call about trust, first. Building a trusted, well known brand takes an incredible amount of time, resources and positive interactions with users.

Trust takes about half a microsecond to break.

Repairing trust is one of the hardest things to do, be it in relationships with brands, products and, most certainly, other people. It might not be possible, and if it is, the relationship is never the same after.

In the Great Race to AI Dominance in Product Design, there is an incredible risk of losing the very people who keep your products propped up.

Trust isn’t a small thing. It is an enormous thing.

Now, I usually hate “should” statements. But I will make one here: taking the time to test products, check AI and think carefully about the risks of using it in products should be a constant feature of product design, now and in the future of our work.

Trust your gut. Trust your team. Trust your design. Trust your AI? Maybe.

Trust that you need to check in with your users and customers about how they feel about AI and how much they trust it, and go from there.
