The Road to All-Day XR Glasses

source link: https://avibarzeev.medium.com/the-road-to-all-day-xr-glasses-46063af34285


Photo by Josh Calabrese on Unsplash

Where are our consumer lifestyle XR glasses?

I’ve been working on aspects of XR, the Metaverse, and Spatial Computing for over 30 years, including helping or advising about ten different XR headset projects. I’ve been lucky to contribute to plans early on, most often by proving or disproving requirements and by defining key user experiences, before anyone spends a billion dollars building them. I’ve learned a bit about what works and what falls flat. Sometimes, the right answer is “not yet.”

I will avoid disclosing anything my past employers would still consider proprietary. I’ll link to a few older published patents where they help teach us something (that is the actual purpose of patents, believe it or not). But they’re more often used by tech pundits as tea leaves for product plans. Alas, they only prove that some R&D happened and that the company thought it was worth protecting. I’ll avoid some patents that I think would cause speculation.

Don’t take anything I say as evidence of product plans by any company or even as any criticism of anyone’s efforts. That’s not my intent at all.

For context, the first true XR experience I ever built was a CAVE, borrowing $250k in computers and giant projectors and spending another $30k on raw materials. Disney’s amazing $100k VR HMD from the 90s never shipped commercially and required cables from the ceiling to carry the weight. But several hundred thousand people got to try it.

By January 2010, I’d hoped we were ready to start the R&D for usable consumer XR glasses. It was time for a big shot in the arm for this slowly developing field. Google Glass and Magic Leap were also coalescing around the same time. Lucky for us, our small incubation team at Microsoft was asked to find new ideas for the next generation XBox, after the Kinect’s presumed mid-cycle boost (Kinect launched in the fall of 2010).

“Make it radical,” the XBox executives said. “Something that scares us.”

I had some “radical” ideas to offer. And our little team soon got to work on a new product concept called “Screen Zero,” for one screen to replace them all. I led the tech exploration and helped define experiences in its first formative year. The reasons I left are not worth the space in this article. But, through the hard work of a thousand more people, HoloLens launched in 2016.

It was groundbreaking. But it’s still not an all-day consumer wearable device. Neither is the Magic Leap 2, or Snap Spectacles, or Varjo, or Quest today.

So what is it going to take to deliver all-day wearable AR glasses?

Maximalism vs. Minimalism

The maximalist approach, which HoloLens ultimately took, packs lots of sensors, algorithms and power into a high-end system. Once we understand the engineering and UX, we can theoretically shrink it down. But that takes a lot more time: up to a decade for some electronics to shrink in power alone.

Cambria and similar devices are so maximalist that they use big opaque VR displays plus multiple cameras to simulate AR with precise per-pixel control over the blending of reality and simulation. Maximalism is best for high-end applications and for doing core R&D. Some argue that they’re the only things that work right now, even if the applications are more industrial.

However, even the most expensive devices on the market today are not yet all-day wearable, not yet ready for ordinary social interactions, or even walking safely down the street. Some of the most maximal features, like true holographic or light-field displays, are not ready for consumer use.

At the other end of the spectrum, following the minimalist approach to consumer XR glasses today, are Amazon Echo Frames, Snap Spectacles and Ray-Ban Stories, among others. They pack whatever tech fits within the limits of wearable glasses right now, often ditching the display entirely.

Wait! Are non-visual glasses really XR?

If it augments a person’s perspective contextually, then I’d say yes. A podcast or music mix isn’t XR because it probably has no sense of you or your environment. Adding spatial audio (tracking your head motions) and cameras for AI could make it XR. A soundtrack tuned to your context would be too.

Minimalism can sell many more units in the near-term, most often by focusing on a smaller set of solutions and making those first class. If you get it right, as the Walkman and iPhone did, you can sell billions. But don’t assume minimalism is easy. In many ways it’s harder to get right.

The Optimal Approach

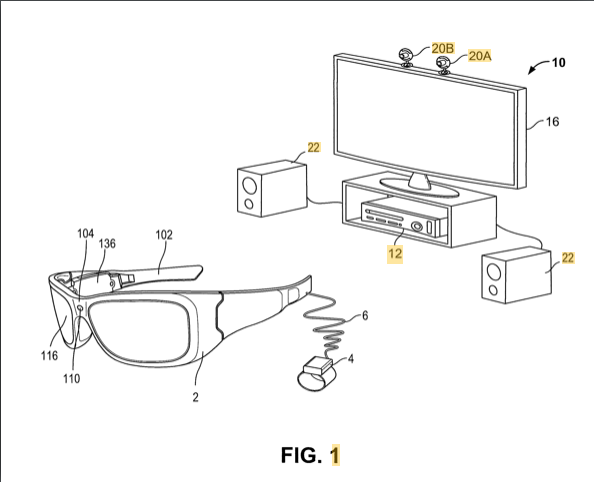

I personally wanted XBox’s Screen Zero to be a hybrid of minimalist glasses with a maximalist console in the loop. The glasses should be the size of a pair of Oakleys or smaller. A next-gen game console would do most of the heavy lifting for up to four pairs of glasses in the same room.

Image from a Microsoft patent on foveated and split rendering between glasses and console.

Ergonomics, Power and Heat

Why split up the work? It comes down to power; or more precisely: heat.

All of a computer’s work ends up as heat, and hopefully some helpful photons and/or mechanical actuations, like sound. How much heat? A light-weight pair of AR glasses can dissipate roughly one watt comfortably.

Your typical smartphone can dissipate around 10 watts, and afterward gets hot and throttles down. A console or PC can use 10–100x more energy than a phone and therefore 100–1000x more than glasses. Think tiny LED flashlight vs. big heavy clothes dryer. That’s a huge difference in workloads.
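As a back-of-the-envelope sketch of those ratios, here is the same arithmetic in Python. It uses only the round numbers quoted above; the function and constant names are made up for illustration.

```python
# Rough thermal budgets in watts, taken from the round figures in the text.
GLASSES_W = 1.0   # what lightweight glasses can comfortably dissipate
PHONE_W = 10.0    # what a typical smartphone can dissipate before throttling


def console_range_w(phone_w=PHONE_W, low=10, high=100):
    """Console/PC budget as a (low, high) range: 10-100x a phone."""
    return (phone_w * low, phone_w * high)


def ratio_to_glasses(watts, glasses_w=GLASSES_W):
    """How many times more heat a device can shed than the glasses."""
    return watts / glasses_w


low_w, high_w = console_range_w()
print(ratio_to_glasses(PHONE_W))                          # 10.0
print(ratio_to_glasses(low_w), ratio_to_glasses(high_w))  # 100.0 1000.0
```

Anything the glasses do has to fit in roughly a thousandth of what the console can spend, which is the whole argument for splitting the work.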

To balance the work, one needs solutions similar to what I started working on in 2010, such as data fusion, split rendering, and optimized render streaming. Eye-tracked, time-warped rendering can make lower-bandwidth streams look great and cover for communication latency while still locking 3D objects in space.

CPUs, cameras, displays and RAM take a lot of the power and generate a lot of the resulting heat. So the secret is to use very little of these most of the time. Think low-power custom hardware: new kinds of contextual sensors, ultra-low-power displays, and algorithms that can cleverly “wake up.”

This takes time to solve. For example, your PC mice and console controllers would drain their batteries if they didn’t throttle down. They all started off as wired. Today an optical mouse can last months on a small battery.

Beyond Living Rooms

To work broadly, optical see-through AR systems ideally need to block light from bright environments (even windows or lighting in living rooms), better personalize and mix spatial audio with reality, adjust optical focus, capture and recreate virtual holograms of other people, and more.

Even splitting the device into console and headsets, there’s still too much “stuff” on the head. One big ergonomic challenge is getting rid of the giant strap that most XR devices still use (notable exceptions: HTC Focus, Lenovo ThinkReality, Tilt5) to lock the device to your face like a face-hugging Alien.

Any kind of strap that people have to slip down and tighten over their heads will limit the diversity of the customer base (consider head size variability, real hair and sensitivity to mussing it up) and make the glasses less likely to be worn. That means the all-day device has to be ultra-light and generally fit like normal glasses.

Getting down to cool Oakley-sized glasses or smaller often means moving more of the system into a body pack or remote. Magic Leap shipped with just such a belt pack. Another XR device I noticed is shipping with some of the components sitting around a consumer’s neck. The more you split the work with a PC, “edge” or console-level hub, the less is needed on the body.

In 2010, I was personally more interested in the biceps as an anchor spot, with a short tether to the head if needed. This could hold most of the heavier and hotter parts away from the head and neck, with a lot of surface area to radiate the heat away. Biometric sensors on the arms could also potentially detect hand gestures, similar to how Meta is reportedly using the CTRL-labs devices to read hand gestures from the wrist. Back in 2010 it was the “Myo” band that Google later acquired through North, Inc. But it’s also reasonable for product designers to say “no wires,” not even short ones.

Focus

Because so many adults require correction for near or far vision, an all-day wearable form-factor typically needs to magnify and focus the real world for us, even when it’s powered down. Minimally, that means the lenses need to carry custom prescription optics. One company that Snap acquired for its waveguides had previously announced a partnership to embed these light-bending optics into functional prescription lenses, which is quite hard.

But is one prescription enough? Many adults need glasses only for reading or driving (far vision), meaning they’d need to swap their fancy “all-day” XR glasses out for a different prescription, at least some of the time. So do we have two or three pairs of expensive glasses? Or bifocals, or trifocals that bend light differently depending on where you’re looking? (practical, but not ideal)

I pushed for adjusting optical prescriptions dynamically to make the same pair of glasses viable for reading or driving, even for magnifying fine-print and far away signs. It also makes it easier for friends to try them out. Imagine if your XBox worked only for you and no one else in the room? Not much fun.

The current best approaches to dynamic focus include Alvarez (sliding) and liquid-filled adjustable lenses. Mechanical solutions tend to lower reliability. There is considerable R&D going into stacking special LCDs to change focus electronically. Meta bought a company to do that.

And then there’s also the question of focusing virtual imagery based on one’s current gaze. Avegant and Magic Leap have shown us ways to switch rapidly between two focal distances to simulate a simple light-field display, which is important for seeing “virtual stuff” in proper focus within arm’s reach. I’d earlier worked on a few ways to continuously sweep through focal distances. But commercial displays aren’t yet fast enough in practice.
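To make the two-focal-distance idea concrete, here is a minimal sketch of gaze-driven plane selection. It is not how Avegant or Magic Leap actually do it: the plane distances (0.5 m and 2.0 m), the vergence-based distance estimate, and all function names are assumptions chosen for illustration.

```python
import math


def vergence_distance_m(ipd_m, vergence_angle_rad):
    """Estimate fixation distance from the angle between the two eyes' gaze rays.

    Assumes symmetric fixation straight ahead; ipd_m is the interpupillary distance.
    """
    if vergence_angle_rad <= 0:
        return math.inf  # parallel gaze rays: looking at infinity
    return (ipd_m / 2) / math.tan(vergence_angle_rad / 2)


def pick_focal_plane(distance_m, planes_m=(0.5, 2.0)):
    """Pick the display focal plane closest to the fixation distance.

    The comparison is done in diopters (1 / meters), the unit in which
    focus error is normally measured, rather than in meters.
    """
    target_d = 0.0 if math.isinf(distance_m) else 1.0 / distance_m
    return min(planes_m, key=lambda p: abs(1.0 / p - target_d))


# Eyes converging on something about 40 cm away, with a 64 mm IPD (hypothetical values).
angle = 2 * math.atan(0.032 / 0.4)
print(pick_focal_plane(vergence_distance_m(0.064, angle)))  # near plane
print(pick_focal_plane(math.inf))                           # far plane
```

With only two planes, anything within arm’s reach lands on the near plane and everything else on the far one. A continuously swept focus would replace the `min` over two candidates with the fixation distance itself.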

Tracking your eyes can help process what matters, reduce the computational load, and provide more natural user input too. I became very familiar with eye tracking concerns and alerted policy makers to the risks early on.

Finally, on the business side, one company, Luxottica, enjoys huge profit margins selling cheap plastic frames and lenses with expensive brand names attached. It dominates the market today, and most of the brands you know. An XR glasses company has to partner with them or disrupt them, and neither option is easy. Facebook chose to partner with them on the Ray-Ban glasses. Competitors include Warby Parker and other smaller players. You can’t sell a great new product without great distribution channels and partners.

Contrast

Magic Leap 2 offers a way to selectively dim the natural world. I started working on this in 2010 and there’s still no perfect solution today. Many optical engineers still aren’t convinced it’s needed. Here’s why it is:

People widely get why transparent “additive” displays can’t render “black.” Black has RGB = 0,0,0, which adds literally nothing and is effectively invisible against existing light. However, we can easily trick you into perceiving blacks and shadows by proximity to brighter areas.

The real and harder problem comes when you take your XR glasses outdoors and look at a sunlit wall, perhaps near dark or shadowed areas. Some areas could be 1,000 to 10,000 times brighter than others. Even indoors, the contrast ratios are significant enough to make AR visuals seem ghostly. Optical engineers often argue for pumping out more light to overcome this. Their optical systems are often only 1% to 10% efficient, meaning most of that light never even gets into your eyes and just adds more heat. You can’t design a system around optics alone because, as noted earlier, heat is one of the biggest constraints.

Here’s the reality. Any pair of transparent-AR or video-pass-through glasses needs to consider the real world scene when augmenting it. In the transparent case, glasses need to often subtract real lighting to attain the desired final colors. In the video-pass-through case, a display can replace whole pixels. But any transparency in the virtual 3D scene still requires blending it with background colors read in from cameras. So you’re basically looking at [energy-expensive] cameras and circuitry with either transparent or opaque displays. This is a huge design constraint, as it adds power and weight and also blocks the eyes.

Selectively blocking light with transparent glasses is ostensibly cheaper than either adding more display power or cameras. In 2010, I put a simple monochrome LCD in front of a waveguide. It worked as expected, rendering solid 3D objects with a soft black silhouette. But it has downsides, including the need for dynamic calibration and the fact that the LCD distorts real light (mainly, refraction from control wires). It also has poor dynamic range, by itself. Outdoors, you want close to 100% opacity at times. Indoors, especially in social situations and remote telepresence, you want more transparency to see people’s eyes directly.
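The additive-versus-subtractive argument can be written as a tiny per-pixel model. This is a simplified sketch, not the math of any shipping device: it assumes linear light units, a single monochrome dimming layer (one opacity shared by all color channels), perfect alignment, and illustrative function names.

```python
def perceived(real, display, opacity=0.0):
    """Light reaching the eye per channel: dimmed real light plus display light.

    real, display: RGB tuples in arbitrary linear units.
    opacity: 0.0 (fully transparent) to 1.0 (fully blocking) for the dimming layer.
    """
    return tuple(r * (1.0 - opacity) + d for r, d in zip(real, display))


def solve_for_target(real, target):
    """Smallest opacity, plus the display light, needed to show `target`.

    A display can only add light, so the dimmer must first pull every
    real channel down to at or below the target; the display adds the rest.
    """
    opacity = 0.0
    for r, t in zip(real, target):
        if r > t:
            opacity = max(opacity, 1.0 - t / r)
    display = tuple(
        max(0.0, t - r * (1.0 - opacity)) for r, t in zip(real, target)
    )
    return opacity, display


wall = (100.0, 100.0, 100.0)

# Additive only: a "black" pixel adds nothing, so the wall shows straight through.
print(perceived(wall, (0.0, 0.0, 0.0)))              # (100.0, 100.0, 100.0)

# With dimming: black needs full opacity and no display light at all.
print(solve_for_target(wall, (0.0, 0.0, 0.0)))       # (1.0, (0.0, 0.0, 0.0))
```

Without the dimmer, the only way to approach a darker target is to outshine everything around it, which is exactly the power and heat problem described above.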

The main objection to this approach has been that the LCD or other spatial light modulator is typically out of focus, being only an inch from your eye. But even still, with a proper additive and subtractive transparent AR display and some fast lower-power sensors, your sunglasses could block the sun, glare, or headlights without dimming your view elsewhere. You could subtly darken the world to make a recommended book seem to glow brightly. And with more advanced subtraction (filtering), glasses could even re-color the world to enhance any mood: e.g., warm up a gray winter day, light social interactions the way movies do, enhance night vision, and even provide biometric feedback when you’re getting upset or de-focused.

I’ve built various demos and spent a long time looking for better ways to do this. They all have some downsides. But the ML2 implementation gives me hope that the core problems will get solved.

Network

Radios take power too. So there’s always a tradeoff in distributed systems. The most promising future lies in using higher-end radio frequencies for both lower power and higher bandwidth than today. But the main challenge is that these frequencies don’t go through skin or walls (for better or worse). So the solutions need to be extremely clever, with radio waves bounced and beam-formed around rooms and people, perhaps using more transmitters than today. This adds cost and complexity.

For the all-day wearable case, it also requires the network to exist first before shipping the product that depends on it. These limits are the single biggest reason companies have never shipped the split-rendering solutions I advocated for. 5G is closer to what we need, but in the US at least that mainly solves for lower latency and for more people using the network at the same time. We need more than just 5G, but it’s a good start.

To break free of the original “console” (or similar) in the room and still keep the form-factor small and light, we need a way for “edge” compute (think: shared computers in conference rooms, cell towers, or secure remote access to your home computers) to be combined in a way that doesn’t violate our privacy. It would be very worrying for anyone to ship their biometric sensor data up to any edge or cloud solution, as it can easily be exploited.

Cameras

Putting cameras in glasses is a tricky subject. Google Glass made a number of mistakes on social-acceptance and it suffered blowback. But Snap seems to have seen very little of that. Meanwhile Facebook is going long on capturing everyone’s lives in full detail, presumably to serve up more personalized ads, whether we want that or not.

Some camera uses are power-heavy, such as 3D scene digitization and digitally matting people or objects in or out. Continuously tracking your head in space is required for placing 3D graphics correctly, and cameras are still the leading solution. We’re getting better at throttling these uses down to one new frame every second, ten seconds, or less, using IMU [inertial measurement unit] sensors in-between, which are much lower power.
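A rough way to see why throttling the camera matters is to average its energy cost over time. The numbers below (10 mJ to capture and process one tracking frame, 1 mW for an always-on IMU) are hypothetical placeholders, not measurements from any device, and the function name is made up for this sketch.

```python
def average_tracking_power_mw(frame_energy_mj, camera_fps, imu_power_mw):
    """Average tracking power in milliwatts.

    Energy per camera frame (mJ) times frames per second gives mW,
    and the always-on IMU cost is added on top.
    """
    return frame_energy_mj * camera_fps + imu_power_mw


FRAME_ENERGY_MJ = 10.0  # hypothetical: capture plus processing for one frame
IMU_POWER_MW = 1.0      # hypothetical: IMU running continuously

for fps in (30.0, 1.0, 0.1):
    power = average_tracking_power_mw(FRAME_ENERGY_MJ, fps, IMU_POWER_MW)
    print(f"{fps:>5} camera frames/s -> about {power:.0f} mW")
```

Under these assumed numbers, dropping from 30 frames per second to one frame every ten seconds takes tracking from a meaningful share of a one-watt budget down to a level where the IMU itself becomes a large part of the cost.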

Snapping photos or videos is a fairly popular use case, especially if it can be more spontaneous and convenient than other devices. However, the quality of these photos is going to be lower than a typical smartphone, due to the more limited size and power. And putting a small white light on the frames is not enough to solve the complicated social permission issues.

Scene understanding is easier to imagine as a prime feature of camera-laden glasses, partly because it doesn’t have to ever produce photos of other people, and more so because it unlocks some of the most important new use cases for all-day wearable glasses: contextual understanding.

Experiences

The most important R&D I wanted to start in 2010 was using AR glasses with robust eye- and body-tracking to explore more Natural User Interfaces for Spatial Computing, to take us beyond the age-old “rectangles in rectangles” of PCs, mice and traditional 1990s-style GUIs. While the hardware certainly has its limitations, widespread adoption of XR is waiting for someone to solve this experiential question of “why” and “how” do we all interact in the future? “3D Boxes in boxes” is clearly not it. So there’s ample work to do.

While Meta may be focusing their VR efforts on visuals that pass a so-called “Visual Turing Test,” all-day wearable XR glasses need to be more useful than the alternatives. Many people have imagined AR layers or channels that pervade our reality, labeling everything we see, adding information, telling spatial stories in 3D and repainting the world. While that will likely exist on demand, it’s not the common everyday experience I’m expecting.

Mostly, people want to improve on the kinds of things they do a lot: communicating, navigating, discovering the world around us, understanding and even changing the world in places, buying things, experiencing content and making money through their work efforts. For XR glasses to succeed, they need to do all that significantly better than we can do on smartphones or other approaches.

Here are some things a phone can’t do. Imagine a normal-looking pair of glasses that can adapt their focus dynamically and selectively block light. They can proactively and privately speak to you without you typing or verbalizing queries. That alone would be a billion dollar product, before ever adding a display. These glasses can help you remember things, or provide trusted recommendations (vs. push ads) as part of your daily experience.

The most important research I’ve done on this is for async communication between people using non-visual XR glasses. Phones can certainly do voice and text well enough for today. But do they know when you’re trying to focus or pay attention? Can they help you switch contexts at the right time to remain in the flow of work or fun? That’s where these glasses can shine, using bio-sensors and other context (and assuming we can trust the makers).

All of what I’m describing is hard. And the tech barely exists today. It’s not on the minimalist track just yet, but that’s because we haven’t prioritized it over miniaturizing optics and maximizing field-of-view. But if you ask the question: what kind of XR glasses will succeed where others have not? I continue to think my list above would make a stellar product.

I’ll share more thoughts on XR experiences in a future post.
