The Three Must-Haves for Machine Learning Monitoring

source link: https://dzone.com/articles/the-three-must-haves-for-machine-learning-monitori


Machine learning models are not static pieces of code but dynamic predictors that depend on data, hyperparameters, evaluation metrics, and many other variables. It is therefore vital to have insight into the training and deployment process to prevent model drift and predictive stasis. That said, not all monitoring solutions are created equal. These are the three must-haves for a machine learning monitoring tool, whether you decide to build or buy a solution.

Complete Process Visibility

Many applications involve multiple models working in tandem, and these models serve a higher business purpose that may sit two or three steps downstream. Furthermore, a model's behavior will likely depend on data transformations several steps upstream. A simple monitoring system that focuses on the behavior of a single model will therefore miss the holistic picture of model performance in its broader business context. A deeper understanding of model viability comes only from complete process visibility: insight into the entire data flow, the metadata, the context, and the overarching business processes on which the modeling is predicated.

For example, as part of a credit approval application, a bank may deploy a suite of models that assess creditworthiness, screen for potential fraud, and dynamically allocate trending offers and promotions. A simple monitoring system might be able to evaluate any one of these models individually, but solving the overall business problem demands an understanding of the interplay between them. Although the models have divergent goals, each rests on a shared foundation of training data, context, and business metadata. An effective monitoring solution will take these disparate pieces into account and generate unified insights that harness this shared information. These might include identifying niche and underutilized customer segments in the training data distribution, flagging potential instances of concept and data drift, understanding the aggregate impact of the models on business KPIs, and more.

The best monitoring solutions work not only on ML models but also on generic tabular data, which allows them to be extended to all business use cases, not just those with an ML component.
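Flagging data drift across a shared pipeline usually starts with comparing a feature's production distribution against its training distribution. The sketch below uses the Population Stability Index (PSI), one common choice for this comparison; the article does not say which statistic any particular tool uses, and the function name, equal-width binning, and the 0.25 alert cutoff (a frequently quoted rule of thumb) are assumptions for illustration.

```python
import math
from typing import Sequence


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               bins: int = 10, eps: float = 1e-6) -> float:
    """Compare two samples of one numeric feature with the Population Stability Index.

    Bin edges come from the range of the expected (training) sample; production
    values outside that range are clipped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clip out-of-range values
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    p_exp = proportions(expected)
    p_act = proportions(actual)
    # PSI = sum over bins of (actual - expected) * ln(actual / expected)
    return sum((a - e) * math.log(a / e) for e, a in zip(p_exp, p_act))


# Hypothetical data: production values crowd into the upper half of the training range.
training = [i / 100 for i in range(1000)]        # 0.00 .. 9.99
production = [5 + i / 200 for i in range(1000)]  # 5.00 .. 9.995
psi = population_stability_index(training, production)
# 0.25 is an assumed rule-of-thumb cutoff, not a universal standard
print(f"PSI = {psi:.3f} ->", "drift" if psi > 0.25 else "stable")
```

In a multi-model setting such as the bank example, the same check would be run once per shared upstream feature, so a single drifting input is caught before it shows up separately in every model that consumes it.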

Image credit: Mona

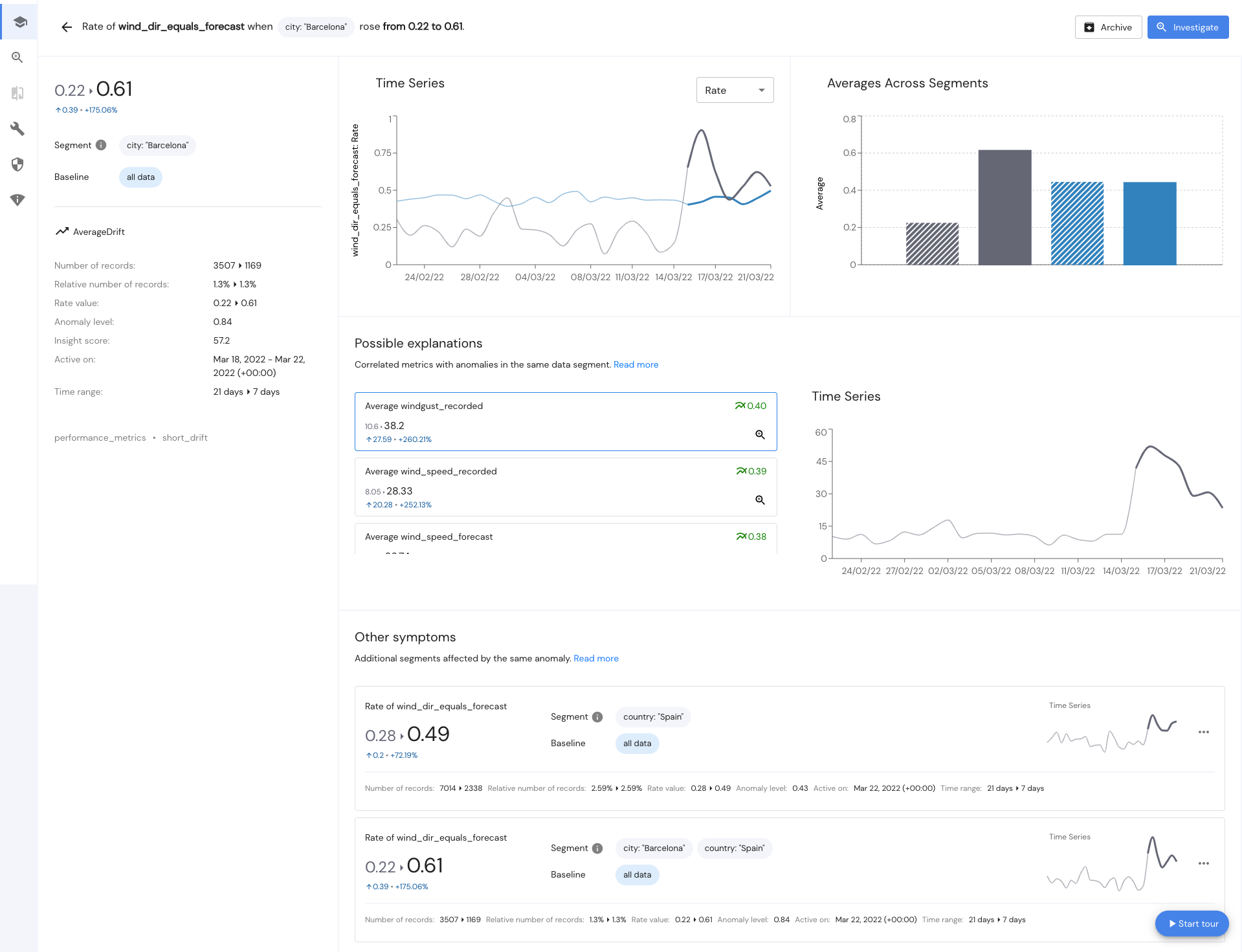

Proactive, Intelligent Insights

Any monitoring solution will let you understand how your model is behaving at a superficial level, but in most cases that is not enough. A common misconception is that a monitoring solution should act as a visualization tool that displays the common metrics associated with an ML model throughout training and deployment. While this is helpful, metrics alone are useless unless they can be used to ground decision-making. What is needed, therefore, is not metrics but insights based on those metrics. A tool like Tableau, for example, might allow you to visualize your customer data, but a truly effective monitoring solution will break that visualization down into meaningful segments and identify the ones that are anomalous, underperforming, or otherwise outliers. A good monitoring tool will provide this sort of decision-making insight automatically, across any data type, metric, or model feature it is given.

The word “automatically” deserves some elaboration here. Some monitoring tools provide dashboards that let you manually investigate subsegments of data to see what is performing well and what is not. However, this kind of surface-level introspection requires painstaking manual effort and misses the larger point. A true monitoring solution will detect anomalies on its own, without relying on a person to supply a hypothesis about where to look.
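As a minimal sketch of what hypothesis-free segment detection could look like, assume rows of logged predictions that each carry a boolean `correct` outcome. The function below scans every value of every listed segment key and flags slices whose accuracy sits well below the global rate, using a one-sided z-test on a proportion. The function name, field names, and thresholds are hypothetical; real tools may use quite different statistics, and the high default threshold is only a crude guard against the many comparisons involved.

```python
import math
from collections import defaultdict


def find_underperforming_segments(rows, segment_keys, metric_key="correct",
                                  z_threshold=3.0, min_size=30):
    """Flag (key, value) slices whose mean outcome is significantly below the global mean."""
    global_rate = sum(r[metric_key] for r in rows) / len(rows)
    flagged = []
    for key in segment_keys:  # every key is scanned; no one picks slices by hand
        groups = defaultdict(list)
        for r in rows:
            groups[r[key]].append(r[metric_key])
        for value, outcomes in groups.items():
            n = len(outcomes)
            if n < min_size:
                continue  # too small to judge reliably
            rate = sum(outcomes) / n
            se = math.sqrt(global_rate * (1 - global_rate) / n)
            if se == 0:
                continue  # global rate of exactly 0 or 1: nothing to compare against
            z = (rate - global_rate) / se
            if z < -z_threshold:
                flagged.append((key, value, n, round(rate, 3), round(z, 2)))
    return sorted(flagged, key=lambda t: t[4])  # worst segments first


# Hypothetical log: the "south" region is right 60% of the time, every other region 90%.
rows = []
for region in ["north", "south", "east", "west"]:
    for channel in ["web", "app"]:
        hits = 150 if region == "south" else 225  # correct predictions out of 250
        for i in range(250):
            rows.append({"region": region, "channel": channel, "correct": i < hits})

flagged = find_underperforming_segments(rows, ["region", "channel"])
print(flagged)
```

Only the weak region is reported; the two channels match the global rate and stay silent, even though nobody told the function to look at regions in particular.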

A great monitoring tool will also reduce noise, for example by recognizing when a single anomaly propagates and causes issues in multiple places, and reporting it once rather than raising a separate alert for each symptom. The monitoring tool succeeds by detecting the root causes of issues, not by flagging surface-level data discrepancies.
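One simple way to implement this kind of noise reduction, assuming the monitoring tool knows the dependency graph of the pipeline, is to report an anomalous node as a root cause only when none of its upstream dependencies is also anomalous, and to fold every other alert under the root it descends from. The node names below reuse the credit-approval example from earlier but are hypothetical, and the graph is assumed to be acyclic.

```python
from collections import defaultdict


def group_alerts_by_root_cause(upstream: dict[str, list[str]],
                               anomalous: set[str]) -> dict[str, list[str]]:
    """Map each root-cause node to the downstream anomalous nodes it explains.

    upstream maps a node to the nodes it directly depends on (assumed acyclic).
    """

    def anomalous_ancestors(node: str, seen: set[str]) -> set[str]:
        found = set()
        for parent in upstream.get(node, []):
            if parent in seen:
                continue  # already explored via another path
            seen.add(parent)
            if parent in anomalous:
                found.add(parent)
            found |= anomalous_ancestors(parent, seen)
        return found

    # A root cause is an anomalous node with no anomalous ancestor.
    roots = {n for n in anomalous if not anomalous_ancestors(n, set())}
    grouped = defaultdict(set)
    for node in anomalous - roots:
        for ancestor in anomalous_ancestors(node, set()):
            if ancestor in roots:
                grouped[ancestor].add(node)
    return {root: sorted(grouped[root]) for root in sorted(roots)}


# Hypothetical pipeline: node -> its direct upstream dependencies.
upstream = {
    "income_feature": ["raw_applications"],
    "credit_model": ["income_feature"],
    "fraud_model": ["income_feature", "device_feature"],
    "offer_model": ["credit_model"],
    "approval_rate_kpi": ["credit_model", "fraud_model"],
}
anomalous = {"income_feature", "credit_model", "fraud_model", "approval_rate_kpi"}
result = group_alerts_by_root_cause(upstream, anomalous)
print(result)
# {'income_feature': ['approval_rate_kpi', 'credit_model', 'fraud_model']}
```

Four raw alerts collapse into a single root cause, the upstream income feature, with the downstream symptoms attached for context.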

Image credit: Mona

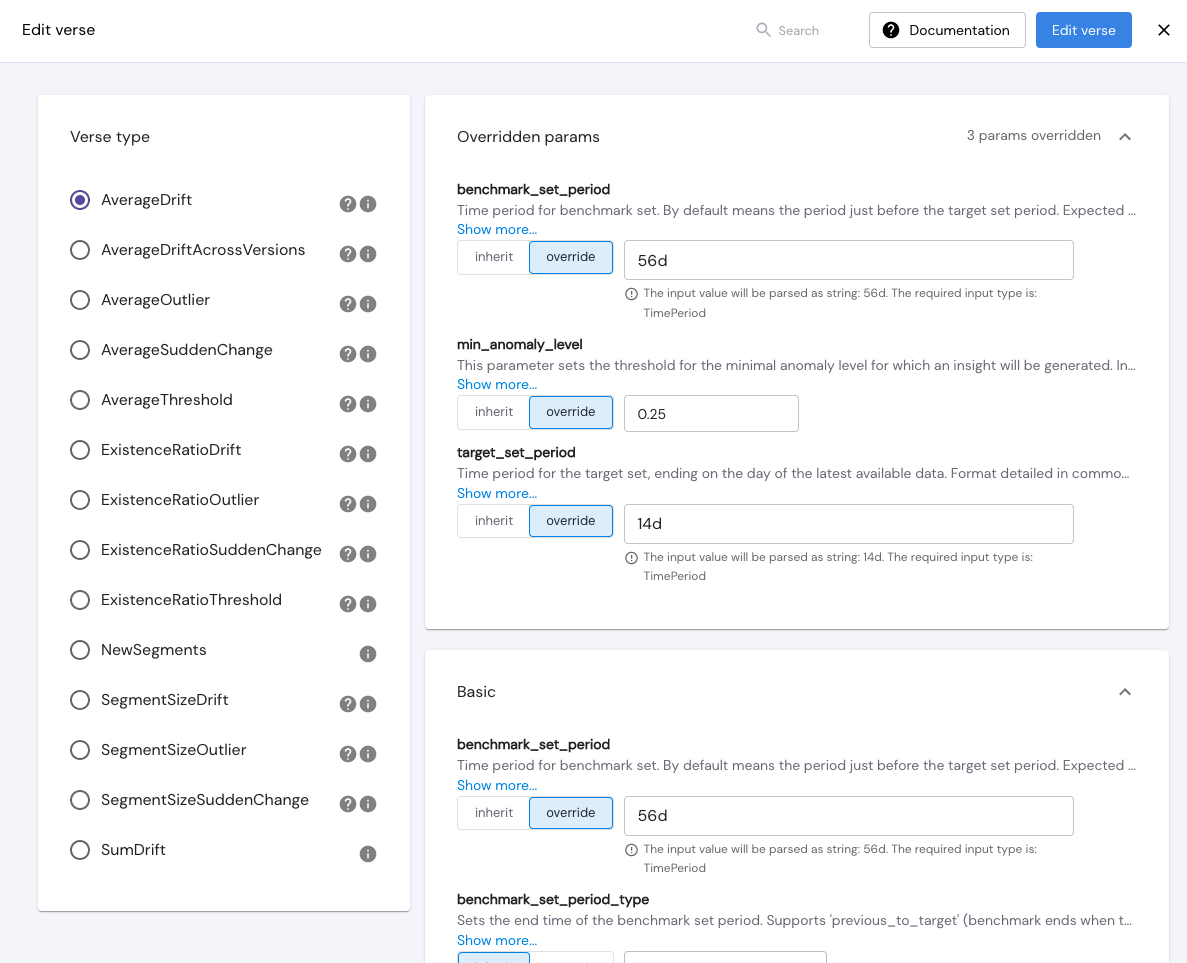

Total Configurability

Finally, a true monitoring solution will be configurable to any problem you throw at it. It should be able to ingest any model metric, any unstructured log, and, really, any piece of tabular data, and provide visualizations, process insights, and actionable recommendations based on that data. This matters because different models have different needs, and a one-size-fits-all solution will not work well in every case. For example, a product recommendation system might start suggesting relevant products to users as soon as it is presented with data. For such a model, an ideal monitoring solution would focus on preventing model drift from the earliest stages, so drift metrics would be the key priority. In contrast, a fraud detection system might need a first-pass deployment in which it learns from tens or hundreds of thousands of real-world transactions before a reliable ground truth begins to emerge. For this type of model, some drift is expected and may even be desirable as the model encounters new types of fraud and recalibrates. A monitoring solution that prioritizes insights into anomalous segments of the input data distribution would therefore be better suited to this use case. The best monitoring solutions are fully configurable, so they can be customized to the unique demands of the task at hand.
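To make the contrast concrete, here is a minimal sketch of per-model monitoring profiles, assuming a hypothetical `MonitorConfig` schema that does not correspond to any specific product's format. The recommender watches drift from the start with a strict cutoff, while the fraud detector suppresses drift alerts during a warm-up period and leads with segment anomalies. All check names, thresholds, and the 100,000-transaction warm-up are illustrative values.

```python
from dataclasses import dataclass, field


@dataclass
class MonitorConfig:
    """Hypothetical per-model monitoring profile (illustrative schema only)."""
    model_name: str
    priorities: list[str]             # checks, in the order they should be surfaced
    drift_threshold: float = 0.25     # e.g. a PSI cutoff for input drift
    segment_z_threshold: float = 3.0  # cutoff for flagging weak segments
    warmup_observations: int = 0      # observations to see before drift alerts fire
    extra_metrics: list[str] = field(default_factory=list)  # any custom metric by name

    def active_checks(self, observations_seen: int) -> list[str]:
        """Return the checks to run now, suppressing drift checks during warm-up."""
        if observations_seen < self.warmup_observations:
            return [c for c in self.priorities if c != "input_drift"]
        return list(self.priorities)


recommender = MonitorConfig(
    model_name="product_recommender",
    priorities=["input_drift", "prediction_drift", "click_through_rate"],
    drift_threshold=0.1,  # strict: watch drift from day one
)

fraud = MonitorConfig(
    model_name="fraud_detector",
    priorities=["segment_anomalies", "input_drift"],
    drift_threshold=0.5,  # looser: some drift is expected as new fraud appears
    warmup_observations=100_000,
    extra_metrics=["chargeback_rate"],
)

print(recommender.active_checks(observations_seen=50))
print(fraud.active_checks(observations_seen=50))
```

Keeping the profile as data rather than code is one way to let the same monitoring engine serve both models; the trade-off is that every new check type still needs an implementation behind its name.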

Conclusion

Given the ever-increasing hype around machine learning, many solutions will take an ML model and provide superficial insights into its feature behavior, output distributions, and basic performance metrics. Solutions that offer complete process visibility; proactive, intelligent insights; and total configurability are rarer. Yet these three attributes are key to getting the highest performance and downstream business impact out of ML models. It is therefore crucial to evaluate any monitoring solution through the lens of these three must-haves and to ensure that it provides not only model visibility but also a more global and complete understanding of the business context.
