How new aircraft automation is putting humans back in the loop

source link: https://uxdesign.cc/how-new-aircraft-automation-are-putting-humans-back-in-the-loop-d47afe62d1b7

Human and machine abilities are not always opposite

While autonomous landing in aircraft has greatly improved since the 1960s, pilots still use manual controls to land their aircraft. Why is that?

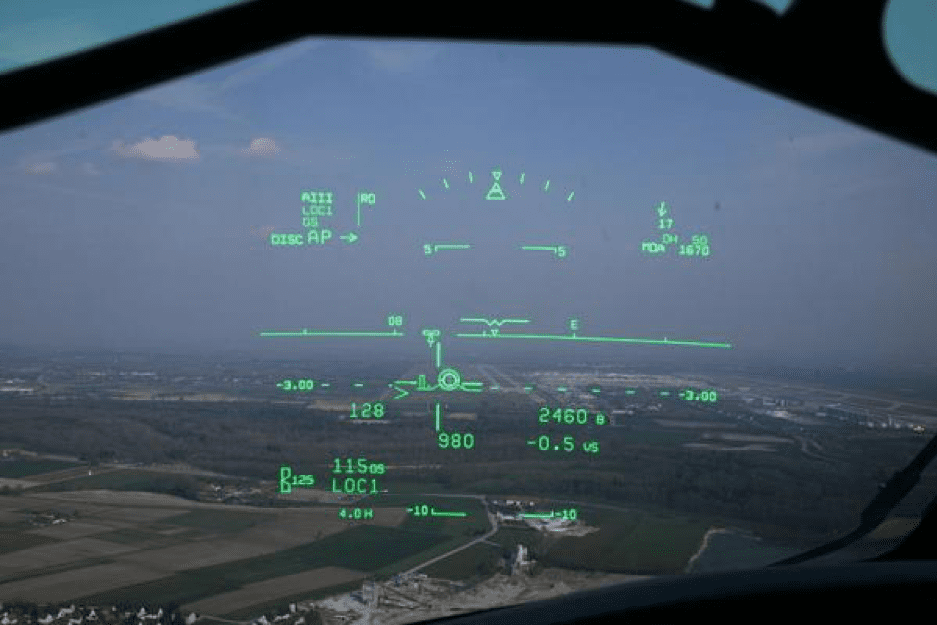

It all comes from one invention: the head-up display (HUD). By displaying data within the pilots' field of view, these interfaces put control back in the hands of humans. They intuitively provide information like trajectory, altitude, and position, and help pilots guide their aircraft in all circumstances.

Paradoxically, these “mixed-reality” technologies automate the flying process while keeping pilots in the loop. And as they find applications in other fields, they have a lot to say about the evolution of automation and our jobs.

Here’s their story.

The history of airplane automation

When they invented the airplane, the Wright brothers also invented the idea of the modern pilot: someone in active control who deals with the physical uncertainties of open-air flight to guide the craft.

Yet from the very beginning, as new technologies constantly augmented their jobs, pilots have complained of missing or losing control of their craft.

In the 1930s, when cockpits were equipped with new instruments such as gyroscopes and altimeters, pilots such as Beryl Markham were already lamenting the loss of skill these instruments would bring.

After the war, newly invented jet aircraft featured electronic guidance systems to manage stability and trajectory. Pilots distrusted these devices too, considering them unpredictable "black boxes": no one could know what an automatic guidance system was made of. But pilots eventually accepted them out of necessity.

Finally, in the 1960s, the difficult task of landing an aircraft in low-visibility conditions called for more advanced automation. These automatic landing systems relied on inertial guidance and radio beams to locate the runway and land at the right angle.

But because these systems needed highly accurate signals from the airport, it was left to the pilots to decide when to turn them on. And in practice, pilots generally preferred to land with manual controls. So, beyond the fact that autoland can be applied in only a few circumstances, pilots were wary of these systems because they wanted to feel in control of the aircraft during such a crucial operation.

Yet, while airborne automation now seems to be moving steadily toward full autonomy, a new type of interface is giving power back to pilots. It provides them with unprecedented control over the landing process while automating their work. What is it exactly?

HUD, or the visual display that empowers pilots

During World War Two, military aircraft were fitted with a visual reticle that indicated and guided the direction of the pilot's fire. In the 1980s, these visual interfaces were brought into commercial aircraft to provide a variety of flight data without the pilot having to look down to find crucial information.

Yet that was just the beginning of this technology. Noting the difficulty pilots have during landing, companies have since modernized those standard displays to help them manage their attention in this crucial phase. The displays have been designed to show more navigation data within the pilot's visual field, such as speed, trajectory, altitude, and inertia vectors.

Paradoxically, these computer displays hand control back to human pilots. With them, pilots remain the only ones responsible for aligning the guidance indications on their display and thus correctly positioning the aircraft on the runway.

Thanks to their ease of use, many airlines adopted HUDs, and pilots agreed to play their interactive game. One of the main selling points of these solutions was that they standardize pilots' landing methods, thus lowering aircraft maintenance costs. They also improve the safety of the operation by making it more intuitive and predictable.

At the same time, pilots have appreciated being able to regain control of their aircraft and make the landing exercise more accessible. They developed more confidence in these hybrid automation solutions, which combine computer calculations with their flying skills.

As a result, similar visual synthesis technologies have found their way into other airborne applications and met with equal success.

The future of human-in-the-loop automation

Realizing the potential of the HUD concept for pilots, companies have developed even more advanced visual synthesis technologies.

These modern synthetic vision systems can, for example, display a 3D model of the environment surrounding an aircraft, detailing terrain, obstacles, and weather. By providing pilots with more accurate visual information, they allow them to adapt to new airports or changing environments, such as dust or snow. This is why they are well suited to both airplanes and helicopters, which are meant to land on more dynamic and steep terrain.

Now many pilots are putting their trust in this software to boost their situational awareness and land their aircraft in the best conditions. And they trust it even more since they can keep their hands on the controls!

Going even further, these technologies are increasingly joining the trend of hybrid or mixed reality. By combining automated visual models with human perception, hybrid AR products make it possible, for example, to accelerate training by delivering it within the user's visual field.

The Microsoft HoloLens headsets, for example, already allow apprentice surgeons to practice the right gestures and workers to repair certain machines with the right movement patterns.

The obvious benefit of these solutions is that they give employees remote visual guidance on precise tasks. Employees only need to follow the instructions within their visual field to learn how to solve new problems or be more productive in their process. And from in-house training to onboarding of new users, to assistance in equipment rehearsal, the applications of these technologies are becoming numerous.

In other words, these human-in-the-loop solutions automate work like never before, while workers keep their hands on the job!
