K3S with MetalLB on Multipass VMs

source link: https://blog.kubernauts.io/k3s-with-metallb-on-multipass-vms-ac2b37298589


Last update: May 22nd, 2020

The repo has been renamed to Bonsai :-)

https://github.com/arashkaffamanesh/bonsai

k3s from Rancher Labs recently surpassed 10k stars on GitHub from the Kommunity during KubeCon in San Diego and went GA with the 1.0 release announcement. I’m sure k3s will play a central role in the cloud-native world, and not only for edge use cases: it will replace a large number of k8s deployments in the data center and in the cloud, or at least it will overtake Rancher’s own RKE implementation in popularity.

This post is about extending k3s’ load balancing functionality with MetalLB, first on your local machine and later in on-prem environments, on the edge or on bare-metal clouds.

This post is NOT about k3s. If you’d like to learn about k3s under the hood, please enjoy Darren Shepherd’s talk “K3s under the Hood” at KubeCon in San Diego.

And don’t miss this great post “Why K3s Is the Future of Kubernetes at the Edge” by Caroline Tarbett.

k3s cluster on your local machine

k3s comes with the Traefik ingress controller and a custom service load balancer implementation for internal load balancing of your microservices on k3s-launched k8s clusters.

You can use k3d or this k3s deployment guide on Ubuntu VMs launched with Canonical’s multipass on your machine to follow this guide.

k3d is the easiest way to get k3s running on your machine. It uses Docker to launch a multi-node k3s cluster within a minute, very similar to KIND and other solutions out there.

In this first post, I’m going to introduce a k3s deployment on multipass VMs on Mac / Linux with MetalLB for load balancing and shed some light on Ingress Controllers and Ingress with and without load balancing capabilities. By the way, this guide should most probably work on Windows too, with some headaches.

In the next post, I’ll introduce the MetalLB integration with a k3d launched k3s cluster on your machine.

About Multipass

With Canonical’s Multipass you can run Ubuntu VMs to build a mini VM cloud on your machine, somewhat similar to Vagrant with VirtualBox.

Multipass comes with cloud-init support to launch instances of Ubuntu just like on AWS, Azure, Google, etc., using HyperKit, KVM, VirtualBox or Hyper-V on your machine.
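As a rough illustration of the cloud-init support, the sketch below writes a small cloud-config file and hands it to `multipass launch`. The file name `cloud-init.yaml`, the instance name `demo`, the installed package and the sizing values are assumptions made for this example; they are not taken from the Bonsai scripts.

```shell
#!/bin/sh
# Write a minimal cloud-config file (hypothetical content, for illustration only)
cat > cloud-init.yaml <<'EOF'
#cloud-config
package_update: true
packages:
  - curl
EOF

# Launch an Ubuntu VM with that config if multipass is installed.
# -n instance name, -c number of CPUs, -m memory, -d disk size
if command -v multipass >/dev/null 2>&1; then
  multipass launch -n demo -c 1 -m 1G -d 5G --cloud-init cloud-init.yaml
else
  echo "multipass not found, only wrote cloud-init.yaml"
fi
```

Once the instance is up, `multipass shell demo` should drop you into a VM that already has the listed packages installed.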

About Services, Ingress Controller, Ingress Object and Load Balancer and LB Service

Before we go through the easy k3s installation, let’s talk about services, ingress controller, the ingress object and load balancing and understand how the MetalLB Load Balancer implementation combined with Traefik’s Ingress and load balancer implementation on k3s works on your local machine or on a real multi-node bare metal environment.

In Kubernetes, a service defined with the type LoadBalancer acts as an ingress (an entry point) to your service in your cluster, but it needs a load balancer implementation, since Kubernetes doesn’t provide an external load balancer implementation, for good reasons.

MetalLB is a software-defined load balancer implementation for bare metal / edge environments. So why use an ingress at all, and not only a LoadBalancer service, to provide the ingress functionality for our services running in a k8s / k3s cluster?

An ingress provides a means to get an entry point to the services within a cluster. In other words, an ingress exposes HTTP and HTTPS routes from outside the cluster to services within the cluster, while a service itself provides a cluster IP and acts as an internal load balancer.

An Ingress handles load balancing at Layer 7. The Ingress Controller watches the Ingress objects and implements the routing rules they define for a particular application, using a host, path or other rules to send traffic to the service endpoints and on to the Pods.

Ingress is a separate declaration that does not depend on the Service type and with Ingress multiple Services and backends could be managed with a single load balancer ( → enjoy this article about Load Balancing and Reverse Proxying for Kubernetes Services).

A service defined with the type LoadBalancer is exposed with an external IP to the outside of the cluster (in most cases to the internet). This IP is usually not bound to a physical interface of a node; it is assigned by the load balancer implementation, which is the cloud provider’s on a public cloud or something like MetalLB on bare metal.

An Ingress without a load balancer service in the front is a Single Point of Failure, that’s why usually an ingress is combined with a Load Balancer in the front to provide High Availability with intelligent capabilities through the Ingress Controller such as path based or (sub-) domain based routing with TLS termination and other capabilities defined through annotations in the ingress resource.

As already mentioned k3s comes with the Traefik Ingress Controller as the default Ingress Controller, which allows us to define an Ingress object for HTTP(S) traffic to be able to expose multiple services through a single IP.

When we create an ingress for a service, the ingress controller provides a single entry point, on every node in the cluster, to the service defined in the ingress resource.

The Traefik ingress controller service itself is exposed with the type LoadBalancer through k3s’ own service load balancer implementation. Traefik scans all ingress objects in the cluster and makes sure that the requested hostname or path is routed to the right service in our k8s cluster.

With that said, k3s provides out-of-the-box ingress and in-cluster load balancing through built-in k8s services capabilities, since a service in k8s is an internal load balancer as well.

Nice to know

A k8s service provides internal load balancing capabilities to the end-points of a service (containers of a pod).

An ingress provides an entry point from the world outside the cluster to a service defined in the ingress definition.

A service defined with the type load balancer is exposed with an external IP and doesn’t provide any logic or rules for routing the client requests to a service.

A load balancer implementation is up to us or the cloud service provider, on bare metal environments MetalLB is the (only?) software defined load balancer implementation which we can use.

A MetalLB implementation without an ingress controller support doesn’t make sense in most cases, since MetalLB doesn’t provide any support to route client requests based on (sub-) domain name or path or provide TLS termination on the LB side out of the box.

k3s deployment on Multipass VMs

You need Multipass installed on your machine. Head to multipass.run, download the Multipass package and install it:

$ wget https://github.com/canonical/multipass/releases/download/v1.0.0/multipass-1.0.0+mac-Darwin.pkg

$ sudo installer -target / -verbose -pkg multipass-1.0.0+mac-Darwin.pkg

# verify the version

$ multipass version

multipass 1.0.0+mac

multipassd 1.0.0+mac

If you’d like to follow this guide, please clone the repo and run only the deploy-bonsai.sh script:

$ git clone https://github.com/arashkaffamanesh/bonsai

$ cd bonsai

$ ./deploy-bonsai.sh

Before you run the script, you might like to know what you’re running. The 8-deploy-only-k3s.sh script includes 2 scripts:

1-deploy-multipass-vms.sh

and

2-deploy-k3s-with-portainer.sh

The first included script 1-deploy-multipass-vms.sh launches 4 nodes, node{1..4}, and writes the hosts entries into your /etc/hosts file, which is why you need to provide your sudo password. Your /etc/hosts is backed up first, and the hosts entries are copied into the hosts file of your multipass VMs as well.
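The hosts-file step can be approximated with a small helper. This is only a sketch, not the content of 1-deploy-multipass-vms.sh: the function name `hosts_entries` is made up for this example, and it assumes that the first three columns of `multipass list --format csv` are Name, State and IPv4.

```shell
#!/bin/sh
# Turn `multipass list --format csv` output into /etc/hosts lines ("IP name").
# Assumes the first three CSV columns are Name, State and IPv4.
hosts_entries() {
  # Skip the header row and keep only running instances
  awk -F, 'NR > 1 && $2 == "Running" { print $3, $1 }'
}

# Print the entries for the local VMs if multipass is installed
if command -v multipass >/dev/null 2>&1; then
  multipass list --format csv | hosts_entries
fi
```

To actually append the entries, back up /etc/hosts first and then pipe the output through `sudo tee -a /etc/hosts`.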

The second included script 2-deploy-k3s-with-portainer.sh deploys the k3s master on node1 and the worker nodes on node{2..4}, copies the k3s.yaml (kube config) to your machine, taints the master node so that it is not schedulable, labels the worker nodes with the node role, deploys Portainer and finally prints the nodes and brings up Portainer in a new tab in your browser:
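For orientation, here is a hedged sketch of what such a deployment boils down to. It is not the repo’s script: the `run` helper and the `DRY_RUN` switch are invented for this example, the Portainer step is left out, and it assumes the usual k3s install script at get.k3s.io, the node token under /var/lib/rancher/k3s/server/node-token and a kubeconfig at /etc/rancher/k3s/k3s.yaml that points to 127.0.0.1. By default it only prints the commands.

```shell
#!/bin/sh
# DRY_RUN=1 (default) only prints the commands; set DRY_RUN=0 to execute them.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

MASTER=node1
WORKERS="node2 node3 node4"

# 1. Install the k3s server on the master node
run multipass exec "$MASTER" -- bash -c "curl -sfL https://get.k3s.io | sh -"

# 2. Fetch join token, master IP and kubeconfig (placeholders in dry-run mode)
if [ "${DRY_RUN:-1}" = "1" ]; then
  TOKEN="<node-token>"
  MASTER_IP="<master-ip>"
else
  TOKEN=$(multipass exec "$MASTER" -- sudo cat /var/lib/rancher/k3s/server/node-token)
  MASTER_IP=$(multipass info "$MASTER" | awk '/IPv4/ { print $2 }')
  # Assumes the generated kubeconfig uses 127.0.0.1 as the server address
  multipass exec "$MASTER" -- sudo cat /etc/rancher/k3s/k3s.yaml \
    | sed "s/127.0.0.1/$MASTER_IP/" > k3s.yaml
  export KUBECONFIG=k3s.yaml
fi

# 3. Join the worker nodes as k3s agents
for w in $WORKERS; do
  run multipass exec "$w" -- bash -c \
    "curl -sfL https://get.k3s.io | K3S_URL=https://$MASTER_IP:6443 K3S_TOKEN=$TOKEN sh -"
done

# 4. Keep workloads off the master and give the workers a role label
run kubectl taint node "$MASTER" node-role.kubernetes.io/master=effect:NoSchedule
for w in $WORKERS; do
  run kubectl label node "$w" node-role.kubernetes.io/node=""
done
```

Running it as is prints the planned commands, which is a cheap way to review them before running with `DRY_RUN=0`.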

$ export KUBECONFIG=k3s.yaml

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

node4 Ready node 144m v1.16.3-k3s.2

node2 Ready node 149m v1.16.3-k3s.2

node3 Ready node 146m v1.16.3-k3s.2

node1 Ready master 153m v1.16.3-k3s.2

$ multipass ls

Name State IPv4 Image

node1 Running 192.168.64.19 Ubuntu 18.04 LTS

node2 Running 192.168.64.20 Ubuntu 18.04 LTS

node3 Running 192.168.64.21 Ubuntu 18.04 LTS

node4 Running 192.168.64.22 Ubuntu 18.04 LTS

The whole installation should take about 4 minutes, depending on your internet speed. At the end we’ll get something like this:

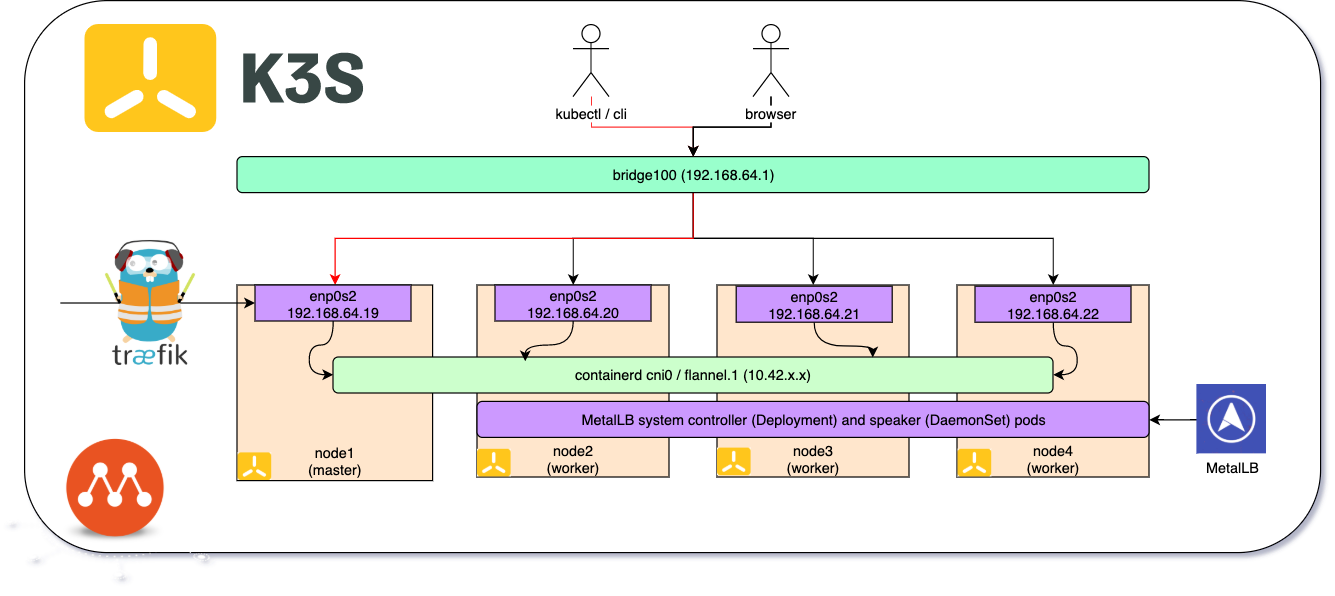

We access our local k3s cluster through kubectl, the multipass CLI or a browser. Multipass creates a bridge interface bridge100 with the IP 192.168.64.1 as a gateway to interact with the multipass VMs. Each VM gets an IP address from the range 192.168.64.0/24, which is our host network.

You can ssh into one of the multipass VMs with `multipass shell node1` and run ifconfig to get the network interfaces. k3s deploys flannel as the overlay network and uses containerd as the container runtime.

The cni0 is the container network interface which acts as a bridge within the VM to route the traffic over the flannel network interface for pod to pod communication. The veth* interfaces are virtual interfaces attached to the pods running in the VMs.

To find out which components k3s is running, you can run:

$ kubectl get all -A

As we can see traefik has a deployment with a single pod which is the traefik ingress controller with the default backend. The svclb-traefik-* pods are deployed with a DaemonSet svclb-traefik and the traefik service is exposed with the type LoadBalancer and the IP 192.168.64.20 in my case (node2):

Let’s ssh into the master node and a worker node and see what we’re running:

$ multipass exec node1 -- bash -c "sudo crictl ps -a"

$ multipass exec node2 -- bash -c "sudo crictl ps -a"

As we can see, the lb-port-* containers, which belong to the svclb-traefik pods, are running on the master and worker nodes. Among other things, they keep the ports accessible from outside the cluster for ingress or a service of type LoadBalancer. The traefik container has only one replica and runs on one of the nodes (in my case node2).

As Mark Betz explains in his great blog post series “Understanding Kubernetes Networking”:

A service of type LoadBalancer has all the capabilities of a NodePort service plus the ability to build out a complete ingress path, assuming you are running in an environment like GCP or AWS that supports API-driven configuration of networking resources.

This is true, but for Bare Metal environments and our k3s home lab we can use MetalLB to provide load balancing within Kubernetes and build an ingress path to our services by using a dedicated LoadBalancer IP handed out by MetalLB for each service.

Let’s see how it works. First we create the whoami deployment, the service with a ClusterIP and the ingress:

$ kubectl apply -f whoami-deployment.yaml

$ kubectl apply -f whoami-service.yaml

$ kubectl apply -f whoami-ingress.yaml

$ kubectl apply -f whoareyou-service.yaml
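To give an idea of what an ingress with a host rule looks like on this Kubernetes version, the sketch below writes a hypothetical stand-in for whoami-ingress.yaml. The file name `whoami-ingress-sketch.yaml`, the service name `whoami-service`, the port 80 and the ingress class annotation are assumptions; the manifests in the repo may differ.

```shell
#!/bin/sh
# Hypothetical stand-in for whoami-ingress.yaml; names and ports are assumed.
cat > whoami-ingress-sketch.yaml <<'EOF'
apiVersion: networking.k8s.io/v1beta1   # Ingress API available on Kubernetes v1.16
kind: Ingress
metadata:
  name: whoami-ingress
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: node3            # requests with "Host: node3" match this rule
    http:
      paths:
      - path: /
        backend:
          serviceName: whoami-service   # assumed name of the ClusterIP service
          servicePort: 80               # assumed service port
EOF
echo "wrote whoami-ingress-sketch.yaml"
```

Note that `networking.k8s.io/v1beta1` fits the v1.16 cluster used here; Kubernetes 1.22 and later only accept `networking.k8s.io/v1`, which uses a different backend syntax.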

As we can see the whoami pods are running on node2 and node4:

In the ingress (whoami-ingress.yaml) we defined node3 as the host. The IP address shown in this case is coincidentally the same as node3’s IP (this might not be the case in your environment):

Now we can call the service with `curl node3`:

$ curl node3

Hostname: whoami-deployment-7bd5df5b9b-vf95g

...

Host: node3

X-Forwarded-Host: node3

X-Forwarded-Port: 80

X-Forwarded-Prefix: /

X-Forwarded-Proto: http

X-Forwarded-Server: traefik-65bccdc4bd-kghp4

X-Real-Ip: 10.42.2.0

If we repeat the curl command, we’ll see that each request hits another container on a different host. So we get a kind of load balancing through the ingress, but the load balancing itself is done by the service and not by the ingress.
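One way to make the distribution of requests visible is to tally the `Hostname:` line of each whoami response. This is a small sketch; the helper name `count_backends` and the number of requests are arbitrary choices for this example.

```shell
#!/bin/sh
# Count how often each pod answered, based on the "Hostname:" line of whoami.
count_backends() {
  grep '^Hostname:' | awk '{ print $2 }' | sort | uniq -c
}

# Send a few requests to the ingress host and summarize the responses.
# Prints nothing if node3 is not reachable from where this runs.
for i in 1 2 3 4 5 6; do
  curl -s --max-time 2 http://node3 || true
done | count_backends
```

Each output line shows a request count followed by a pod name, so more than one line means the requests were spread over several pods.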

Our service has a ClusterIP and it’s not exposed via NodePort or with the type LoadBalancer yet:

If we stop node3 to simulate a node failure, obviously we can’t call the service with `curl node3`, which means ingress is a SPOF (Single Point Of Failure)!

In such a case we could edit the ingress and change the node host to another node, e.g. node2 and get access to the service again, but this means in production environments, we’d face a downtime.

Fine, now let’s see how MetalLB can help and deploy MetalLB with:

$ kubectl apply -f https://raw.githubusercontent.com/google/metallb/v0.8.3/manifests/metallb.yaml

MetalLB Layer2 ConfigMap:

$ kubectl create -f metal-lb-layer2-config.yaml

We’re using the layer2 mode here, since the BGP mode needs an external network router. In the ConfigMap we define the desired address-pool (IP range) for our load balancer IPs.
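For readers who don’t have the repo at hand, a layer2 ConfigMap for MetalLB v0.8.x looks roughly like the sketch below. The file name `metal-lb-layer2-config-sketch.yaml`, the pool name and above all the address range are assumptions for this example; pick a range from your own multipass network that is not used by the VMs.

```shell
#!/bin/sh
# Hypothetical layer2 config for MetalLB v0.8.x; the address range is assumed.
cat > metal-lb-layer2-config-sketch.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 192.168.64.23-192.168.64.30
EOF
echo "wrote metal-lb-layer2-config-sketch.yaml"
```

This ConfigMap format applies to the MetalLB version used in this post; newer MetalLB releases are configured through custom resources instead.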

As soon as MetalLB is deployed with the layer2 config, the traefik LoadBalancer External-IP address will change, in my case it changed to 192.168.64.23:

This IP is allocated by the MetalLB controller from the address pool defined in the ConfigMap; no DHCP service is involved. It is a VIP (virtual IP) that is not bound to a network interface: in layer2 mode a MetalLB speaker on one node answers ARP requests for it, and kube-proxy on that node forwards the external traffic to the pods of the service.

As we can read on the MetalLB Layer2 mode documentation page:

In layer 2 mode, all traffic for a service IP goes to one node. From there, kube-proxy spreads the traffic to all the service’s pods.

In that sense, layer 2 does not implement a load-balancer. Rather, it implements a failover mechanism so that a different node can take over should the current leader node fail for some reason.

If the leader node fails for some reason, failover is automatic: the old leader’s lease times out after 10 seconds, at which point another node becomes the leader and takes over ownership of the service IP.

This means that even with MetalLB in layer2 mode, a single node receives all traffic for a service IP. If that leader node goes down, the service is unreachable until another node takes over, so we still have a short-lived SPOF.

Now let us edit the whoareyou-service and define the service type as a LoadBalancer:

$ kubectl edit svc whoareyou-service

# change the type from

type: ClusterIP

# to

type: LoadBalancer

With that, the whoareyou-service will get an EXTERNAL-IP of 192.168.64.24.
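If you prefer a non-interactive alternative to `kubectl edit`, the same change can be sketched with `kubectl patch`. The guard around kubectl is only there so that the snippet does nothing harmful on a machine without cluster access; the service name is the one used above.

```shell
#!/bin/sh
# Patch that changes only the Service type
PATCH='{"spec":{"type":"LoadBalancer"}}'

if command -v kubectl >/dev/null 2>&1; then
  kubectl patch svc whoareyou-service -p "$PATCH"
  # EXTERNAL-IP should now show an address from the MetalLB pool
  kubectl get svc whoareyou-service
else
  echo "kubectl not found, patch would be: $PATCH"
fi
```

Patching back to `{"spec":{"type":"ClusterIP"}}` should release the external IP again.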

And after that we can call the service with:

$ curl 192.168.64.24

Hostname: whoareyou-deployment-7db6694b98-8724x

IP: 127.0.0.1

IP: ::1

IP: 10.42.1.5

IP: fe80::7c54:cfff:fea2:9505

RemoteAddr: 10.42.3.0:61432

GET / HTTP/1.1

Host: 192.168.64.24

User-Agent: curl/7.64.1

Accept: */*

To be honest I don’t know yet how this magic works behind the scenes exactly, if you know, please provide a comment here or join us on slack, we love to learn from and together with you!

Running Rancher Management Server on k3s

If you’d like to run Rancher Server on k3s to manage a fleet of k3s servers, run:

$ kubectl delete ingress whoareyou-ingress

$ ./3-deploy-rancher-on-k3s.sh

And enjoy :-)

Welcome to Rio on K3s!

In one of the next posts, I’m going to explore Rancher Labs’ Rio. If you’d like to give it a try on this K3s implementation on Multipass VMs, run:

$ curl -sfL https://get.rio.io | sh

# adapt the IP addresses below to your worker nodes’ IPs

$ rio install --ip-address 192.168.64.20,192.168.64.21,192.168.64.22

# take a cup of coffee and come back!

$ rio dashboard

And enjoy, you'd love it!

Credits

Many thanks to Darren Shepherd and the great folks at Rancher Labs who are making the world a better place with 100% Open Source products and solutions like K3s, Rancher, RKE, Longhorn, Submariner and now with Rio.

And thanks to the whole community, and especially to Lennart Jern for his advice in this great discussion, which encouraged me to write this post.

We’re hiring!

We are looking for engineers who love to work in Open Source communities like Kubernetes, Rancher, Docker, etc.

If you wish to work on such projects please do visit our job offerings page.
